I am currently working on a Java project for a product that consists of 4 monolithic deployments, tightly coupled through shared Hibernate JPA objects and database schemas. The project contains new services, but it is also held back by more than 10 years of legacy code. It has multiple on-premise deployments, and each one stores well over 20 terabytes of data in roughly 300 tables with foreign keys spread throughout the database. In an effort to stay relevant, it was decided that one of the deployments should be made completely independent of the others. For simplicity, we can call the 3 remaining monolithic deployments Foo, and the new and improved independent deployment Bar.

The Bar deployment has long used two database schemas, foo and bar, while the Foo project only uses the foo schema. To achieve our goal of a completely independent Bar, two requirements have to be fulfilled:

- Bar must have its own objects and remove all dependencies on Foo.

- A subset of data in the foo schema must be sent to Bar. This includes initial data and any later updates.

This article covers our thinking on the second requirement and the result. All discussions and decisions were made as a team, but the actual development of the solutions was done by me. Any major blockers or changes were brought back to the team and discussed to find the best approach to the problem.

Disclaimer: Code examples are anonymized and simplified to keep them short and understandable, and cannot be used as-is. They are included to help illustrate the process. Examples of simplification are removing null checks or using Strings as integers. Though the code is for the most part valid Java, it should rather be considered Java-like pseudocode.

Startup

Our starting point was that most of the development should be done in Bar, and that Bar's requirements set the standard for which changes were made, what data was sent and in which format. Necessary changes in Foo would be made, but as far as practically possible, it was Bar that was being improved. An example of a change in Foo would be moving services that directly updated the foo schema from Bar to Foo.

As for the data migration, we concluded that there were four plausible communication options: SOAP WS, REST, domain events and change data capture.

SOAP WS and REST

For a small-scale project, SOAP WS or REST services may have been the optimal solution, as they are relatively easy to set up and use. Foo could update its current services to send requests and Bar could create endpoints to receive them. Unfortunately, this was not a small-scale project: it would require identifying and rewriting over 100 services and their individual methods in Foo, and creating approximately the same number of endpoints in Bar to process the data. It would also create a 1-to-1 dependency between the sending service in Foo and the receiving endpoint in Bar.

Domain events

With the project scale in mind, many would automatically conclude that data should be forwarded from Foo to Bar using domain events and Kafka or another messaging service. In many ways, this would solve our problem of moving data from Foo to Bar. Domain events could be processed in a more generic way, so the 1-to-1 dependency would be removed. It would also allow new services to subscribe to changes. Alas, this would still require identifying and rewriting over 100 services in Foo so they could send domain events.

Change data capture

As you may have concluded from the title or abstract, we landed on a change data capture (CDC) solution. Identifying the foo tables that Bar depended on required little effort, so the focus became simply moving the data. Many CDC solutions exist, but as we have several on-premise deployments that aren't exposed to the internet, online CDC services were automatically disqualified. Another caveat is that both Foo and Bar support Oracle DB and PostgreSQL. Any CDC service would have to support both databases, as we did not want to create two separate solutions. After many discussions about the requirements of a CDC solution, we concluded that either hooking into Hibernate's event system or using Debezium would suffice.

First draft — Hibernate

Hibernate is a popular Java ORM framework that makes it possible to listen to events during and after a database transaction.

As Foo already used Hibernate, we assumed that using the Hibernate event system would be a simple task. The assumption was that we knew which tables Bar depended on and could therefore listen for changes to the relevant objects. This would allow us to extend Foo rather than create a new deployment. Thus I started out on my journey to write my first CDC solution, based on Hibernate.

A first draft was born:

[Code listing: HibernateCDCEventListener.java]

Initial issues

To the trained eye, it is already possible to spot potential issues with extending this solution. First off is the already growing switch statement in createDataEvent(Object, Set<String>), which initially supported only 6 different object types. Unfortunately, it was not possible to give the classes a common ancestor or interface and make use of Java's support for polymorphism. This resulted in an ever-growing switch statement that had to delegate the handling of each object type.

As for functionality, this code did not meet the requirement of always processing the event before the database transaction was committed. Finding the source of the faulty events would have meant debugging the more than 300,000 lines of code in the Foo project, so Hibernate events earlier in the process were used instead.

Second attempt

With some experimentation, the PERSIST, DELETE, MERGE and FLUSH_ENTITY events seemed to fulfill the necessary requirements regarding transactions. The only problem was that by now the switch statement had grown to 13 separate cases with no end in sight.

Another problem was the complexity of objects. Many JPA objects had relations to other JPA objects recursively. This complicated the process of finding the correct object to process upon receiving events and prolonged simple jobs like sending updates for a new table.

Tactical retreat

After spending a total of 21 work days on the monstrosity that was battling several years of legacy code and misunderstood Hibernate practices, we finally threw in the towel and concluded that this was not the optimal solution.

The advantages of using Hibernate no longer outweighed the disadvantages, and Debezium was once again considered. Among the reasons to abandon the Hibernate solution were the discovery of a few select places that updated the database outside the JPA context, the lack of support for sending updates when data was edited directly in the database, and the growing complexity. A final reason was that the Hibernate solution would require a separate tool to migrate existing data before the CDC solution could take over and keep Bar updated.

Had this been a smaller project that had used Hibernate correctly for its entire lifetime, with simpler object relations, it might have worked, but this was not the case with Foo and Bar.

Second draft — Debezium

Back at the drawing board, our other option was Debezium. Debezium is a CDC library that reads changes directly from the database's write-ahead log (WAL). The library supports several databases, can migrate all data in the relevant tables, and continues reading events from where it left off should it be restarted. It is also possible to implement several Debezium interfaces to add custom logic, e.g. how to store the current position in the WAL. This means that we could create a single CDC solution that both migrates existing data and sends updates, and that can continue from where it left off after a shutdown, provided the WAL has not been overwritten.
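To make the resume behaviour concrete, here is a minimal sketch of the idea using only the JDK. It is not Debezium's implementation: `InMemoryOffsetStore` and `ResumableLogReader` are hypothetical names, and a plain list stands in for the WAL. The stored position only advances after the handler has returned, so a failed or interrupted run picks up at the same entry after a restart.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.function.Consumer;

/** Hypothetical stand-in for a persisted log position; not a Debezium class. */
class InMemoryOffsetStore {
    private int position = 0; // index of the next unprocessed log entry

    int load() { return position; }

    void save(int next) { position = next; }
}

/** Reads a list that stands in for the write-ahead log, resuming from the stored position. */
class ResumableLogReader {
    private final List<String> log;
    private final InMemoryOffsetStore offsets;

    ResumableLogReader(List<String> log, InMemoryOffsetStore offsets) {
        this.log = log;
        this.offsets = offsets;
    }

    /** Processes at most maxEntries entries; the position advances only after the handler returns. */
    void run(int maxEntries, Consumer<String> handler) {
        int start = offsets.load();
        int end = Math.min(log.size(), start + maxEntries);
        for (int i = start; i < end; i++) {
            handler.accept(log.get(i)); // if this throws, the position is not advanced
            offsets.save(i + 1);
        }
    }
}

class ResumeDemo {
    public static void main(String[] args) {
        List<String> wal = List.of("insert#1", "update#1", "insert#2", "delete#1");
        InMemoryOffsetStore offsets = new InMemoryOffsetStore();
        List<String> seen = new ArrayList<>();

        new ResumableLogReader(wal, offsets).run(2, seen::add);  // first run, then a "shutdown"
        new ResumableLogReader(wal, offsets).run(10, seen::add); // restart resumes at the third entry

        System.out.println(seen); // [insert#1, update#1, insert#2, delete#1]
    }
}
```

Because the position is saved after the handler runs rather than before, an entry can be handled twice if the process dies between the two steps, but it is never skipped.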

Debezium could solve our problem in two ways: either using the Debezium Engine and implementing our own logic, or using the full Debezium platform with Kafka, Kafka Connect and ZooKeeper. Using the full Debezium platform was not an option for two reasons.

- This would require on-premise environments to support new infrastructure services, i.e. Kafka and ZooKeeper.

- We would still have to implement logic to gather the necessary data based on the events, since it is usually split across multiple tables. This was a recurring issue, as data from most tables did not make sense on its own. More on this in the section on data extractors.

Based on our previous findings, we decided to use the Debezium Engine directly and handle events as they are published. The code would have to handle errors and guarantee that events are sent at least once. Debezium otherwise seemed to support all our requirements, including direct edits in the database. To guarantee uptime and reduce complexity, a separate deployment was preferable. Also, to simplify development, PostgreSQL was prioritized and Oracle DB was put on the back burner. This led me to my second attempt at implementing a CDC solution, this time based on Debezium.

Prerequisites

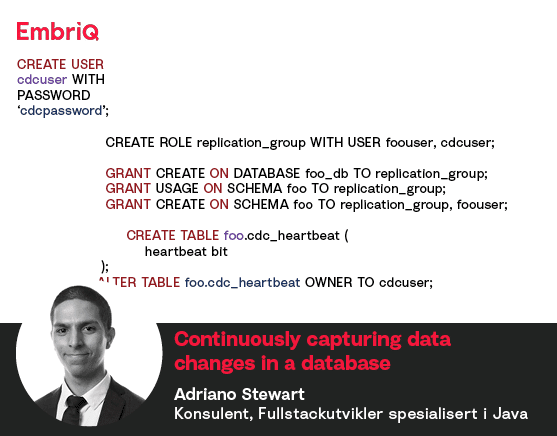

There are a few prerequisites for Debezium to work against a database. Without going into too much detail about why the changes are needed, here is a complete script that gives the Debezium user the correct access to the PostgreSQL database, along with the heartbeat table that I used.

[Code listing: cdcSetup.sql]

The heartbeat table is used when running tests to force Debezium to stay up to date within a reasonable time, in our case less than 50ms. During normal execution, a heartbeat might not be necessary.

In addition to the previous setup, replication_group must also be granted ownership of all tables that are to be monitored, and the WAL level must be set to logical.

sed -i 's|#wal_level = replica|wal_level = logical|g' var/lib/postgresql/data/postgresql.conf


The WAL level can be confirmed by executing show WAL_LEVEL in e.g. psql.

Given the correct configuration of Debezium, it will create a publication for the monitored tables and process updates.
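As a rough illustration of what such a configuration can look like, the sketch below builds the properties for a PostgreSQL source with plain `java.util.Properties`. The property keys are Debezium and Kafka Connect settings as I understand them, but every value (host, credentials, slot and publication names, file path, table names) is a placeholder, and this is not the configuration used in the project. With `publication.autocreate.mode` set to `filtered`, the connector creates a publication that covers only the tables in `table.include.list`.

```java
import java.util.Properties;

class DebeziumConfigSketch {

    /** Builds engine properties for a PostgreSQL source; all values are placeholders. */
    static Properties postgresProperties(String tables) {
        Properties props = new Properties();
        props.setProperty("name", "foo-cdc");
        props.setProperty("connector.class", "io.debezium.connector.postgresql.PostgresConnector");

        // Where the engine records how far it has read in the WAL.
        props.setProperty("offset.storage", "org.apache.kafka.connect.storage.FileOffsetBackingStore");
        props.setProperty("offset.storage.file.filename", "/tmp/foo-cdc-offsets.dat");
        props.setProperty("offset.flush.interval.ms", "1000");

        // Connection details for the monitored database.
        props.setProperty("database.hostname", "localhost");
        props.setProperty("database.port", "5432");
        props.setProperty("database.user", "debezium");
        props.setProperty("database.password", "change-me");
        props.setProperty("database.dbname", "foo");

        // Logical decoding via PostgreSQL's built-in pgoutput plugin.
        props.setProperty("plugin.name", "pgoutput");
        props.setProperty("slot.name", "foo_cdc_slot");
        props.setProperty("publication.name", "foo_cdc_publication");
        props.setProperty("publication.autocreate.mode", "filtered");
        props.setProperty("table.include.list", tables);

        // Debezium 2.x name; 1.x releases used database.server.name instead.
        props.setProperty("topic.prefix", "foo");
        return props;
    }

    public static void main(String[] args) {
        Properties props = postgresProperties("foo.customer,foo.customer_address");
        System.out.println(props.getProperty("table.include.list"));
    }
}
```

Properties like these are what gets handed to the Debezium Engine builder when the engine is created.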

Hello world… again

Debezium is, contrary to Hibernate, well documented. There are a few places where the wording can be confusing, but they have in general managed to document all important features well enough.

This is an example of using the Debezium Engine with Spring Boot and PostgreSQL:

[Code listing: DebeziumConnector.java]

[Code listing: Launcher.java]

Initial findings

You can immediately see that this code is simpler than the Hibernate version. It requires a little more setup, but the logic is not as complicated. Apart from unfamiliar Debezium classes and properties, the setup should be easy for anyone to understand.

The only thing worth noting is how beans and properties are added. Normal best practice would be to set @Autowired and @Value("${…}") directly above the field. In most cases this would be sufficient, and default values could be added. In our case, the Debezium CDC solution needed to work both as a standalone application and as a library to be used in tests, and in most cases no default values could be used. By setting these annotations on the setters, the standalone application will start like any other Spring Boot application. When it is used as a library in a Spring project without component scanning, each field can be set manually, and the user of the library isn't forced to have identical properties in their property files.
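The setter-based approach can be sketched without Spring on the classpath. In the snippet below the annotations appear only as comments, and the class name `CdcSettings` and the property keys `cdc.database.host` and `cdc.database.port` are hypothetical. The point is that the same class works both when a container calls the setters and when a test calls them by hand.

```java
/** Hypothetical settings holder; field and property names are illustrative only. */
class CdcSettings {
    private String databaseHost;
    private Integer databasePort;

    // In the standalone Spring Boot application this setter would carry
    // @Value("${cdc.database.host}") so that Spring injects the property.
    void setDatabaseHost(String databaseHost) {
        this.databaseHost = databaseHost;
    }

    // Likewise @Value("${cdc.database.port}") in the standalone application.
    void setDatabasePort(int databasePort) {
        this.databasePort = databasePort;
    }

    /** There are no defaults, so fail fast when a value was never supplied. */
    String describe() {
        if (databaseHost == null || databasePort == null) {
            throw new IllegalStateException("databaseHost and databasePort must be set");
        }
        return databaseHost + ":" + databasePort;
    }
}

class ManualWiringDemo {
    public static void main(String[] args) {
        // Library usage without component scanning: the caller wires the values by hand.
        CdcSettings settings = new CdcSettings();
        settings.setDatabaseHost("localhost");
        settings.setDatabasePort(5432);
        System.out.println(settings.describe()); // localhost:5432
    }
}
```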

Handling events

As seen in the initial version of DebeziumConnector.java, an event handler is needed. This can either be a Consumer<ChangeEvent<T, T>> or Debezium's ChangeConsumer<ChangeEvent<T, T>>. The first is a standard Java Consumer that handles single events, while the latter handles events in batches. This guide will only show the first version, but the principles are the same in both. The only thing to remember when handling events in batches is that each event must be committed so Debezium knows that it has been properly processed. In my case, T is a String, as the Debezium engine is configured to send JSON.

Here is the event handler and a helper class:

[Code listing: CdcEvent.java]

[Code listing: SingleEventHandler.java]

The above code is all that is needed to process an event. In fact, most of it can be removed if you only need data from the table that changed, which should be the case for most CDC solutions. In Foo and Bar's case, extra data is required.

The flow of the code can be followed from accept(ChangeEvent<String, String>) and can be summarized in three steps:

- Extract the ID of the row that is edited, along with the table name

- Call the correct data extractor based on the table name

- Export the extracted data
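The three steps can be sketched roughly as follows. This is not the author's SingleEventHandler: `RowChange` and `ChangeRouter` are hypothetical names, JSON parsing is left out (the sketch assumes the table name and row ID have already been pulled out of the event), and extractors and the exporter are modelled as plain functions.

```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.List;
import java.util.Map;
import java.util.function.Consumer;
import java.util.function.Function;

/** Hypothetical, already-parsed view of a change event: which table and which row changed. */
final class RowChange {
    final String table;
    final long id;

    RowChange(String table, long id) {
        this.table = table;
        this.id = id;
    }
}

/** Routes each change to the extractor registered for its table, then exports the result. */
class ChangeRouter implements Consumer<RowChange> {
    private final Map<String, Function<Long, String>> extractorsByTable = new HashMap<>();
    private final Consumer<String> exporter;

    ChangeRouter(Consumer<String> exporter) {
        this.exporter = exporter;
    }

    void register(String table, Function<Long, String> extractor) {
        extractorsByTable.put(table, extractor);
    }

    @Override
    public void accept(RowChange change) {
        // Step 1: the table name and row ID identify what changed.
        Function<Long, String> extractor = extractorsByTable.get(change.table);
        if (extractor == null) {
            return; // no extractor registered for this table, so nothing to send
        }
        // Step 2: the extractor gathers the data that belongs to the changed row.
        String payload = extractor.apply(change.id);
        // Step 3: hand the result to the exporter.
        exporter.accept(payload);
    }
}

class ChangeRouterDemo {
    public static void main(String[] args) {
        List<String> exported = new ArrayList<>();
        ChangeRouter router = new ChangeRouter(exported::add);
        router.register("foo.customer", id -> "customer:" + id);

        router.accept(new RowChange("foo.customer", 42L));
        router.accept(new RowChange("foo.unmonitored", 7L)); // ignored

        System.out.println(exported); // [customer:42]
    }
}
```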

Data extractors

Since we had to extract data based on several tables, I created individual data extractors that each inherited from the abstract DataExtractor class.

[Code listing: DataExtractor.java]

[Code listing: FooExtractor.java]

Because component scanning is enabled, sending data triggered by changes in another table only requires adding the table to the allTables String in DebeziumConnector.java and adding a corresponding DataExtractor implementation.
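A minimal sketch of this arrangement, assuming each extractor declares which table it handles. The names `TableExtractor`, `CustomerExtractor` and `ExtractorIndex` are hypothetical and the real DataExtractor class may look quite different. In a Spring application the list of extractors would typically be injected by the container; here it is built by hand.

```java
import java.util.HashMap;
import java.util.List;
import java.util.Map;

/** Hypothetical base class: one subclass per monitored table. */
abstract class TableExtractor {
    /** Fully qualified name of the table whose changes trigger this extractor. */
    abstract String table();

    /** Gathers the data to send for the row with the given ID. */
    abstract String extract(long id);
}

/** Example subclass; a real extractor would query the database and join related tables. */
class CustomerExtractor extends TableExtractor {
    @Override
    String table() {
        return "foo.customer";
    }

    @Override
    String extract(long id) {
        return "{\"customerId\":" + id + "}";
    }
}

class ExtractorIndex {
    /** Indexes extractors by table name and rejects two extractors for the same table. */
    static Map<String, TableExtractor> byTable(List<TableExtractor> extractors) {
        Map<String, TableExtractor> index = new HashMap<>();
        for (TableExtractor extractor : extractors) {
            if (index.put(extractor.table(), extractor) != null) {
                throw new IllegalArgumentException("Duplicate extractor for " + extractor.table());
            }
        }
        return index;
    }

    public static void main(String[] args) {
        Map<String, TableExtractor> index = byTable(List.of(new CustomerExtractor()));
        System.out.println(index.get("foo.customer").extract(7)); // {"customerId":7}
    }
}
```

With this shape, supporting a new table means writing one new subclass; the lookup code does not change.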

You might read this and think:

“Are you truly processing the database history if you select from the database at a later point in time?”

This is a fair objection, and in most cases this would be considered an anti-pattern, as the changes in the WAL are ignored. The CDC solution could in theory be shut down for a day while the database still receives updates. When the CDC solution is then turned back on, it would resume from where it left off in the WAL, processing day-old events while extracting more recent data from the database.

But there are no rules without exceptions. In Bar's case, most changes only need the latest version of the data, regardless of how many times it has been edited, and a history is not necessary. In the few cases where history is needed, the data is already stored in immutable rows, so simply sending the rows will send the history as of that time. With the given data structure and requirements, the CDC solution is simply a trigger to extract and send the necessary data from Foo to Bar.
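The consequence of treating events as triggers can be shown with a small simulation. The names are hypothetical and a map stands in for the database. Three old events for the same row are replayed after a restart, and each one reads the row as it looks now, so the receiver gets the latest version three times rather than three historical versions.

```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.List;
import java.util.Map;

class TriggerSemanticsDemo {

    /** Replays change events (row IDs only) and reads the current state of each row. */
    static List<String> replay(List<Long> changedIds, Map<Long, String> currentRows) {
        List<String> sent = new ArrayList<>();
        for (Long id : changedIds) {
            // The event's own payload is ignored; only the row's present state is sent.
            sent.add(id + "=" + currentRows.get(id));
        }
        return sent;
    }

    public static void main(String[] args) {
        Map<Long, String> database = new HashMap<>();
        database.put(1L, "v3"); // row 1 was edited three times while the CDC process was down

        List<Long> pendingEvents = List.of(1L, 1L, 1L); // one event per edit, still in the log

        System.out.println(replay(pendingEvents, database)); // [1=v3, 1=v3, 1=v3]
    }
}
```

One implication, which is my assumption rather than something stated above, is that the receiving side should tolerate getting the same version more than once.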

Data exporter

The final piece of the puzzle is exporting the data. This was solved with a simple interface, to allow for easy replacement should we decide to switch technologies; the initial implementation was a JMS solution. A similar implementation was written for tests, where changes were written directly to the database instead of going through a queue, thus avoiding extra delays.

[Code listing: DataExporter.java]

[Code listing: JmsExporter.java]
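The shape of such an interface can be sketched with the JDK alone. The names `PayloadExporter`, `QueueExporter` and `DirectExporter` are hypothetical, a `BlockingQueue` stands in for the JMS queue, and a list stands in for the target table; the real DataExporter and JmsExporter may differ.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.concurrent.BlockingQueue;
import java.util.concurrent.LinkedBlockingQueue;

/** Hypothetical export abstraction: callers do not know how the payload is delivered. */
interface PayloadExporter {
    void export(String payload);
}

/** Stand-in for a queue-based exporter; a BlockingQueue plays the role of the message queue. */
class QueueExporter implements PayloadExporter {
    private final BlockingQueue<String> queue;

    QueueExporter(BlockingQueue<String> queue) {
        this.queue = queue;
    }

    @Override
    public void export(String payload) {
        queue.add(payload);
    }
}

/** Test-oriented exporter that writes straight to the target, skipping the queue. */
class DirectExporter implements PayloadExporter {
    private final List<String> targetTable;

    DirectExporter(List<String> targetTable) {
        this.targetTable = targetTable;
    }

    @Override
    public void export(String payload) {
        targetTable.add(payload);
    }
}

class ExporterDemo {
    public static void main(String[] args) {
        BlockingQueue<String> queue = new LinkedBlockingQueue<>();
        List<String> table = new ArrayList<>();

        PayloadExporter production = new QueueExporter(queue);
        PayloadExporter forTests = new DirectExporter(table);

        production.export("customer:42");
        forTests.export("customer:42");

        System.out.println(queue.peek() + " / " + table.get(0)); // customer:42 / customer:42
    }
}
```

Code that produces payloads depends only on the interface, so swapping the delivery mechanism does not touch the event handling.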

Conclusion

Though the configuration of the database might be new and unfamiliar to many, Debezium in itself is pretty simple to use. Compared to the Hibernate attempt, I have produced a much more effective solution that covers more of our requirements, including requirements that Hibernate could not fulfill, and I did it in half the time: 10.5 work days, to be exact. The solution is not complete yet, but the progress and simplicity are promising, given that I am further along in creating a complete CDC solution than I ever was with Hibernate.